Build reference atlas from scratch

[1]:

import os

os.chdir('../')

import warnings

warnings.simplefilter(action='ignore', category=FutureWarning)

warnings.simplefilter(action='ignore', category=UserWarning)

[2]:

import scanpy as sc

import torch

import scarches as sca

from scarches.dataset.trvae.data_handling import remove_sparsity

import matplotlib.pyplot as plt

import numpy as np

import gdown

[3]:

sc.settings.set_figure_params(dpi=200, frameon=False)

sc.set_figure_params(dpi=200)

sc.set_figure_params(figsize=(4, 4))

torch.set_printoptions(precision=3, sci_mode=False, edgeitems=7)

Download raw Dataset

[4]:

url = 'https://drive.google.com/uc?id=1Vh6RpYkusbGIZQC8GMFe3OKVDk5PWEpC'

output = 'pbmc.h5ad'

gdown.download(url, output, quiet=False)

Downloading...

From: https://drive.google.com/uc?id=1Vh6RpYkusbGIZQC8GMFe3OKVDk5PWEpC

To: /home/marco/Documents/git_repos/scarches/pbmc.h5ad

2.06GB [00:18, 113MB/s]

[4]:

'pbmc.h5ad'

[5]:

adata = sc.read('pbmc.h5ad')

[6]:

adata.X = adata.layers["counts"].copy()

We now split the data into reference and query dataset to simulate the building process. Here we use the ‘10X’ batch as query data.

[7]:

target_conditions = ["10X"]

source_adata = adata[~adata.obs.study.isin(target_conditions)].copy()

target_adata = adata[adata.obs.study.isin(target_conditions)].copy()

print(source_adata)

print(target_adata)

AnnData object with n_obs × n_vars = 22779 × 12303

obs: 'batch', 'chemistry', 'data_type', 'dpt_pseudotime', 'final_annotation', 'mt_frac', 'n_counts', 'n_genes', 'sample_ID', 'size_factors', 'species', 'study', 'tissue'

layers: 'counts'

AnnData object with n_obs × n_vars = 10727 × 12303

obs: 'batch', 'chemistry', 'data_type', 'dpt_pseudotime', 'final_annotation', 'mt_frac', 'n_counts', 'n_genes', 'sample_ID', 'size_factors', 'species', 'study', 'tissue'

layers: 'counts'

For a better model performance it is necessary to select HVGs. We are doing this by applying the scanpy.pp function highly_variable_genes(). The n_top_genes is set to 2000 here. However, if you have more complicated datasets you might have to increase number of genes to capture more diversity in the data.

[8]:

source_adata.raw = source_adata

[9]:

source_adata

[9]:

AnnData object with n_obs × n_vars = 22779 × 12303

obs: 'batch', 'chemistry', 'data_type', 'dpt_pseudotime', 'final_annotation', 'mt_frac', 'n_counts', 'n_genes', 'sample_ID', 'size_factors', 'species', 'study', 'tissue'

layers: 'counts'

[10]:

sc.pp.normalize_total(source_adata)

[11]:

sc.pp.log1p(source_adata)

[12]:

sc.pp.highly_variable_genes(

source_adata,

n_top_genes=2000,

batch_key="batch",

subset=True)

For consistency we set adata.X to be raw counts. In other datasets that may be already the case

[13]:

source_adata.X = source_adata.raw[:, source_adata.var_names].X

[14]:

source_adata

[14]:

AnnData object with n_obs × n_vars = 22779 × 2000

obs: 'batch', 'chemistry', 'data_type', 'dpt_pseudotime', 'final_annotation', 'mt_frac', 'n_counts', 'n_genes', 'sample_ID', 'size_factors', 'species', 'study', 'tissue'

var: 'highly_variable', 'means', 'dispersions', 'dispersions_norm', 'highly_variable_nbatches', 'highly_variable_intersection'

uns: 'log1p', 'hvg'

layers: 'counts'

Create SCVI model and train it on reference dataset

Remember that the adata file has to have count data in adata.X for SCVI/SCANVI if not further specified.

[15]:

sca.models.SCVI.setup_anndata(source_adata, batch_key="batch")

INFO Using batches from adata.obs["batch"]

INFO No label_key inputted, assuming all cells have same label

INFO Using data from adata.X

INFO Computing library size prior per batch

INFO Successfully registered anndata object containing 22779 cells, 2000 vars, 9 batches,

1 labels, and 0 proteins. Also registered 0 extra categorical covariates and 0 extra

continuous covariates.

INFO Please do not further modify adata until model is trained.

Create the SCVI model instance with ZINB loss as default. Insert “gene_likelihood=’nb’,” to change the reconstruction loss to NB loss.

[16]:

vae = sca.models.SCVI(

source_adata,

n_layers=2,

encode_covariates=True,

deeply_inject_covariates=False,

use_layer_norm="both",

use_batch_norm="none",

)

[17]:

early_stopping_kwargs = {

"early_stopping_metric": "elbo",

"save_best_state_metric": "elbo",

"patience": 10,

"threshold": 0,

"reduce_lr_on_plateau": True,

"lr_patience": 8,

"lr_factor": 0.1,

}

vae.train(n_epochs=500, frequency=1, early_stopping_kwargs=early_stopping_kwargs)

INFO Training for 500 epochs

INFO KL warmup for 400 epochs

Training...: 19%|█▊ | 93/500 [02:04<09:08, 1.35s/it]INFO Reducing LR on epoch 93.

Training...: 27%|██▋ | 133/500 [02:58<08:23, 1.37s/it]INFO Reducing LR on epoch 133.

Training...: 38%|███▊ | 188/500 [04:13<06:59, 1.34s/it]INFO Reducing LR on epoch 188.

Training...: 40%|███▉ | 198/500 [04:26<06:41, 1.33s/it]INFO Reducing LR on epoch 198.

Training...: 42%|████▏ | 208/500 [04:39<06:31, 1.34s/it]INFO Reducing LR on epoch 208.

Training...: 42%|████▏ | 210/500 [04:42<06:25, 1.33s/it]INFO

Stopping early: no improvement of more than 0 nats in 10 epochs

INFO If the early stopping criterion is too strong, please instantiate it with different

parameters in the train method.

Training...: 42%|████▏ | 210/500 [04:43<06:32, 1.35s/it]

INFO Training is still in warming up phase. If your applications rely on the posterior

quality, consider training for more epochs or reducing the kl warmup.

INFO Training time: 201 s. / 500 epochs

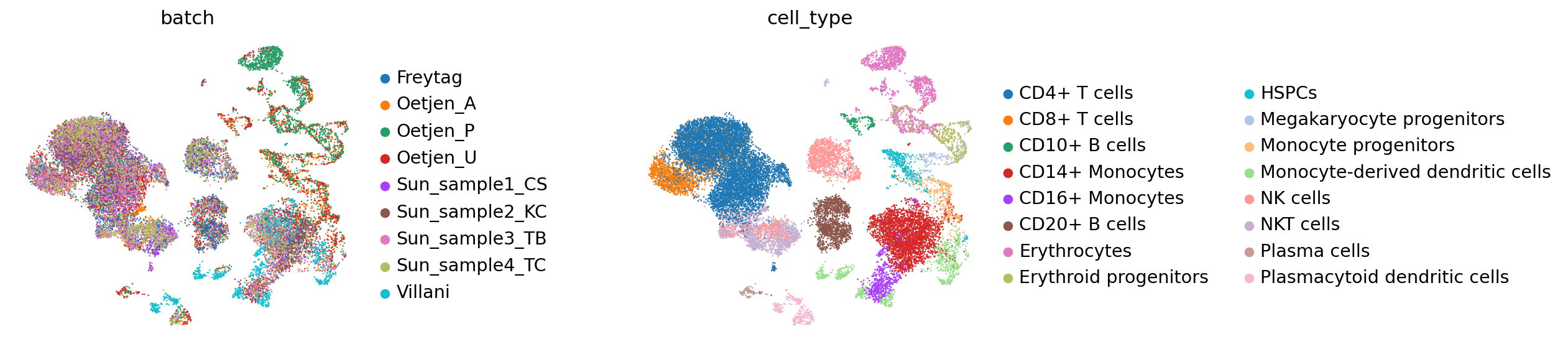

The resulting latent representation of the data can then be visualized with UMAP

[18]:

reference_latent = sc.AnnData(vae.get_latent_representation())

reference_latent.obs["cell_type"] = source_adata.obs["final_annotation"].tolist()

reference_latent.obs["batch"] = source_adata.obs["batch"].tolist()

[19]:

sc.pp.neighbors(reference_latent, n_neighbors=8)

sc.tl.leiden(reference_latent)

sc.tl.umap(reference_latent)

sc.pl.umap(reference_latent,

color=['batch', 'cell_type'],

frameon=False,

wspace=0.6,

)

... storing 'cell_type' as categorical

... storing 'batch' as categorical

After pretraining the model can be saved for later use or also be uploaded for other researchers with via Zenodo. For the second option please also have a look at the Zenodo notebook.

[20]:

ref_path = 'ref_model/'

vae.save(ref_path, overwrite=True)

Use pretrained reference model and apply surgery with a new query dataset to get a bigger reference atlas

[21]:

target_adata

[21]:

AnnData object with n_obs × n_vars = 10727 × 12303

obs: 'batch', 'chemistry', 'data_type', 'dpt_pseudotime', 'final_annotation', 'mt_frac', 'n_counts', 'n_genes', 'sample_ID', 'size_factors', 'species', 'study', 'tissue'

layers: 'counts'

Since the model requires the datasets to have the same genes we also filter the query dataset to have the same genes as the reference dataset.

[22]:

target_adata = target_adata[:, source_adata.var_names]

target_adata

[22]:

View of AnnData object with n_obs × n_vars = 10727 × 2000

obs: 'batch', 'chemistry', 'data_type', 'dpt_pseudotime', 'final_annotation', 'mt_frac', 'n_counts', 'n_genes', 'sample_ID', 'size_factors', 'species', 'study', 'tissue'

layers: 'counts'

We then can apply the model surgery with the new query dataset:

[23]:

model = sca.models.SCVI.load_query_data(

target_adata,

ref_path,

freeze_dropout = True,

)

Trying to set attribute `.uns` of view, copying.

INFO .obs[_scvi_labels] not found in target, assuming every cell is same category

INFO Using data from adata.X

INFO Computing library size prior per batch

INFO Registered keys:['X', 'batch_indices', 'local_l_mean', 'local_l_var', 'labels']

INFO Successfully registered anndata object containing 10727 cells, 2000 vars, 10

batches, 1 labels, and 0 proteins. Also registered 0 extra categorical covariates

and 0 extra continuous covariates.

[24]:

model.train(n_epochs=500, frequency=1, early_stopping_kwargs=early_stopping_kwargs, weight_decay=0)

INFO Training for 500 epochs

INFO KL warmup for 400 epochs

Training...: 12%|█▏ | 61/500 [00:33<04:03, 1.80it/s]INFO Reducing LR on epoch 61.

Training...: 14%|█▍ | 72/500 [00:39<03:52, 1.84it/s]INFO Reducing LR on epoch 72.

Training...: 15%|█▍ | 74/500 [00:40<03:52, 1.83it/s]INFO

Stopping early: no improvement of more than 0 nats in 10 epochs

INFO If the early stopping criterion is too strong, please instantiate it with different

parameters in the train method.

Training...: 15%|█▍ | 74/500 [00:41<03:58, 1.79it/s]

INFO Training is still in warming up phase. If your applications rely on the posterior

quality, consider training for more epochs or reducing the kl warmup.

INFO Training time: 26 s. / 500 epochs

[25]:

query_latent = sc.AnnData(model.get_latent_representation())

query_latent.obs['cell_type'] = target_adata.obs["final_annotation"].tolist()

query_latent.obs['batch'] = target_adata.obs["batch"].tolist()

[26]:

sc.pp.neighbors(query_latent)

sc.tl.leiden(query_latent)

sc.tl.umap(query_latent)

plt.figure()

sc.pl.umap(

query_latent,

color=["batch", "cell_type"],

frameon=False,

wspace=0.6,

)

... storing 'cell_type' as categorical

... storing 'batch' as categorical

<Figure size 320x320 with 0 Axes>

And again we can save or upload the retrained model for later use or additional extensions.

[27]:

surgery_path = 'surgery_model'

model.save(surgery_path, overwrite=True)

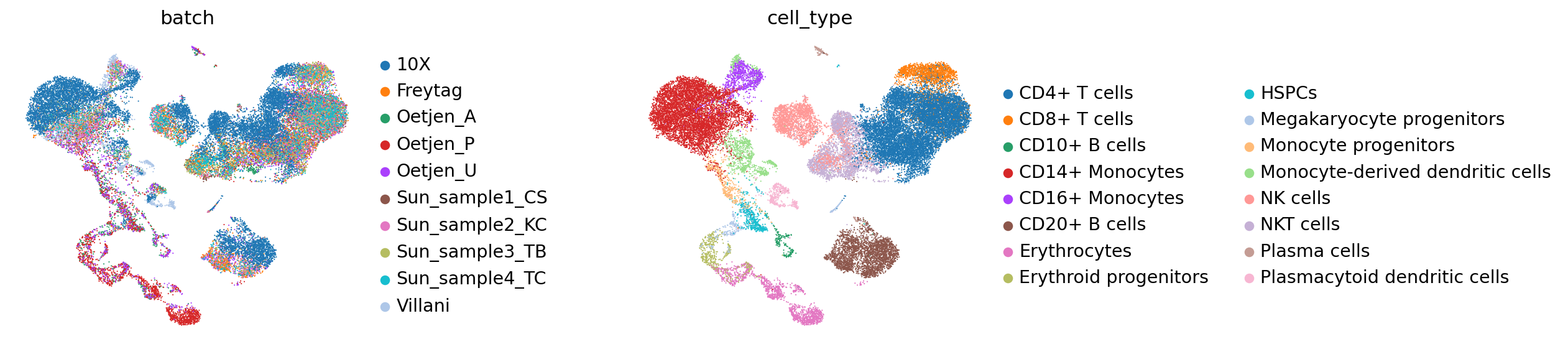

Get latent representation of reference + query dataset and compute UMAP

[28]:

adata_full = source_adata.concatenate(target_adata, batch_key="ref_query")

adata_full

[28]:

AnnData object with n_obs × n_vars = 33506 × 2000

obs: 'batch', 'chemistry', 'data_type', 'dpt_pseudotime', 'final_annotation', 'mt_frac', 'n_counts', 'n_genes', 'sample_ID', 'size_factors', 'species', 'study', 'tissue', '_scvi_batch', '_scvi_labels', '_scvi_local_l_mean', '_scvi_local_l_var', 'ref_query'

var: 'highly_variable-0', 'means-0', 'dispersions-0', 'dispersions_norm-0', 'highly_variable_nbatches-0', 'highly_variable_intersection-0'

layers: 'counts'

[29]:

full_latent = sc.AnnData(model.get_latent_representation(adata=adata_full))

full_latent.obs['cell_type'] = adata_full.obs["final_annotation"].tolist()

full_latent.obs['batch'] = adata_full.obs["batch"].tolist()

INFO Input adata not setup with scvi. attempting to transfer anndata setup

INFO Using data from adata.X

INFO Computing library size prior per batch

INFO Registered keys:['X', 'batch_indices', 'local_l_mean', 'local_l_var', 'labels']

INFO Successfully registered anndata object containing 33506 cells, 2000 vars, 10

batches, 1 labels, and 0 proteins. Also registered 0 extra categorical covariates

and 0 extra continuous covariates.

[30]:

sc.pp.neighbors(full_latent)

sc.tl.leiden(full_latent)

sc.tl.umap(full_latent)

plt.figure()

sc.pl.umap(

full_latent,

color=["batch", "cell_type"],

frameon=False,

wspace=0.6,

)

... storing 'cell_type' as categorical

... storing 'batch' as categorical

<Figure size 320x320 with 0 Axes>

[ ]: