Tutorial for mvTCR

In this tutorial, we create an atlas for tumor-infiltrating lymphocytes (TIL). The dataset is collected by Borcherding and contains ~750,000 samples from 11 cancer types and 6 tissue sources. In this example we use a subsampled version of Lung cancer specific studies. We use mvTCR to build the atlas and later integrate a heldout query into it. After the data integration, we use it to infer the tissue origin from the heldout dataset using a simple kNN trained on the learned latent representation.

mvTCR is a multi-modal generative integration method for transcriptome and T-cell receptor (TCR) data. For more information on mvTCR please refer to Drost 2022

Download dataset

First we download the mvTCR package and the preprocessed Tumor-infiltrating Lymphocyte (TIL) dataset. The unpreprocessed dataset can be downloaded at https://github.com/ncborcherding/utility

[1]:

%%capture

!pip install mvtcr

[2]:

import gdown

import os

url = 'https://drive.google.com/uc?id=1Lw9hytk3BiZ8aOucvnhRr9qyeMeNTs4v'

output = 'borcherding_subsampled.h5ad'

if not os.path.exists(output):

gdown.download(url, output, quiet=False)

Import libraries

[3]:

import torch

import scanpy as sc

from sklearn.neighbors import KNeighborsClassifier

from sklearn.metrics import f1_score

[4]:

%%capture

import scarches as sca

[5]:

%load_ext autoreload

%autoreload 2

[6]:

random_seed = 42

sca.models.mvTCR.utils_training.fix_seeds(random_seed)

Set hyperparameters

Here the hyperparameters can be set

[7]:

# We holdout two Cohorts out of 13

holdout_cohorts = ['GSE162500']

# maps each batch to an index, important is that the holdout cohorts are at the last positions

mapper = {'GSE176021': 0, 'GSE139555': 1, 'GSE154826': 2, 'GSE162500': 3}

[8]:

# Parameters for model and training

params_architecture = {'batch_size': 512,

'learning_rate': 0.0006664047426647477,

'loss_weights': [1.0, 0.016182440457269676, 1.0110670042409596e-10],

'joint': {'activation': 'leakyrelu',

'batch_norm': True,

'dropout': 0.05,

'hdim': 100,

'losses': ['MSE', 'CE'],

'num_layers': 2,

'shared_hidden': [100, 100],

'zdim': 50,

'c_embedding_dim': 20,

'use_embedding_for_cond': True,

'num_conditional_labels': 11,

'cond_dim': 20,

'cond_input': True},

'rna': {'activation': 'leakyrelu',

'batch_norm': True,

'dropout': 0.05,

'gene_hidden': [500, 500, 500],

'num_layers': 3,

'output_activation': 'linear',

'xdim': 5000},

'tcr': {'embedding_size': 64,

'num_heads': 4,

'forward_expansion': 4,

'encoding_layers': 1,

'decoding_layers': 1,

'dropout': 0.25,

'max_tcr_length': 30,

'num_seq_labels': 24}

}

params_experiment = {

'model_name': 'moe',

'n_epochs': 200,

'early_stop': 5,

'balanced_sampling': 'clonotype',

'kl_annealing_epochs': None,

'metadata': ['clonotype', 'Sample', 'Type', 'Tissue', 'Tissue+Type', 'functional.cluster'],

'save_path': 'saved_models',

'conditional': 'Cohort'

}

params_optimization = {

'name': 'pseudo_metric',

'prediction_labels':

{'clonotype': 1,

'Tissue+Type': 10}

}

Load Data

We load the data using scanpy

[9]:

adata = sc.read_h5ad('borcherding_subsampled.h5ad')

adata = adata[adata.obs['Cohort'].isin(list(mapper.keys()))]

adata = adata[adata.obs['Tissue']=='Lung']

adata.obs['Cohort_id'] = adata.obs['Cohort'].map(mapper)

adata.obs['Tissue+Type'] = [f'{tissue}.{type_}' for tissue, type_ in zip(adata.obs['Tissue'], adata.obs['Type'])]

metadata = ['Tissue', 'Type', 'Tissue+Type', 'functional.cluster', 'set', 'Cohort']

Train model on atlas dataset

The model is initialized and trained on the atlas dataset until convergence, for early stopping we subsample 20% from the training dataset as validation dataset

[10]:

# Split data into training and hold-out dataset

adata.obs['set'] = 'train'

adata.obs['set'][adata.obs['Cohort'].isin(holdout_cohorts)] = 'hold_out'

adata.obsm['Cohort'] = torch.nn.functional.one_hot(torch.tensor(adata.obs['Cohort_id'])).numpy()

# Stratified splitting of training into train and val. The val set is used for early stopping

adata_train = adata[~adata.obs['Cohort'].isin(holdout_cohorts)].copy()

adata_train.obsm['Cohort'] = torch.nn.functional.one_hot(torch.tensor(adata_train.obs['Cohort_id'])).numpy()

train, val = sca.models.mvTCR.utils_preprocessing.group_shuffle_split(adata_train, group_col='clonotype', val_split=0.2, random_seed=random_seed)

adata_train.obs.loc[val.obs.index, 'set'] = 'val'

[11]:

model = sca.models.mvTCR.models.mixture_modules.moe.MoEModel(adata_train, params_architecture, params_experiment['balanced_sampling'], params_experiment['metadata'],

params_experiment['conditional'], params_optimization)

[12]:

model.train(params_experiment['n_epochs'], params_architecture['batch_size'], params_architecture['learning_rate'],

params_architecture['loss_weights'], params_experiment['kl_annealing_epochs'],

params_experiment['early_stop'], params_experiment['save_path'])

46%|██████████████████████████████▏ | 93/200 [19:18<22:12, 12.45s/it]

Early stopped

Finetune model on query

To finetune the model, we load the pretrained model with best pseudo metric performance from the previous step. Then we initizalize additional embedding vectors for the query datasets and freeze all weights except the embedding layer, before further training on the query dataset

[13]:

# Separate holdout data and create a validation set (20%) for early stopping

adata_hold_out = adata[adata.obs['Cohort'].isin(holdout_cohorts)].copy()

adata_hold_out.obsm['Cohort'] = torch.nn.functional.one_hot(torch.tensor(adata_hold_out.obs['Cohort_id'])).numpy()

train, val = sca.models.mvTCR.utils_preprocessing.group_shuffle_split(adata_hold_out, group_col='clonotype', val_split=0.2, random_seed=random_seed)

adata_hold_out.obs['set'] = 'train'

adata_hold_out.obs.loc[val.obs.index, 'set'] = 'val'

[14]:

# Load pretrained model

model = sca.models.mvTCR.utils_training.load_model(adata_train, f'saved_models/best_model_by_metric.pt', base_path='.')

model.add_new_embeddings(len(holdout_cohorts)) # add new cond embeddings

model.freeze_all_weights_except_cond_embeddings()

model.change_adata(adata_hold_out) # change the adata to finetune on the holdout data

[15]:

# Finetune model

model.train(n_epochs=200, batch_size=params_architecture['batch_size'], learning_rate=params_architecture['learning_rate'],

loss_weights=params_architecture['loss_weights'], kl_annealing_epochs=None, early_stop=5,

save_path=f'saved_models/finetuning/', comet=None)

3%|█▉ | 6/200 [00:17<09:25, 2.91s/it]

Early stopped

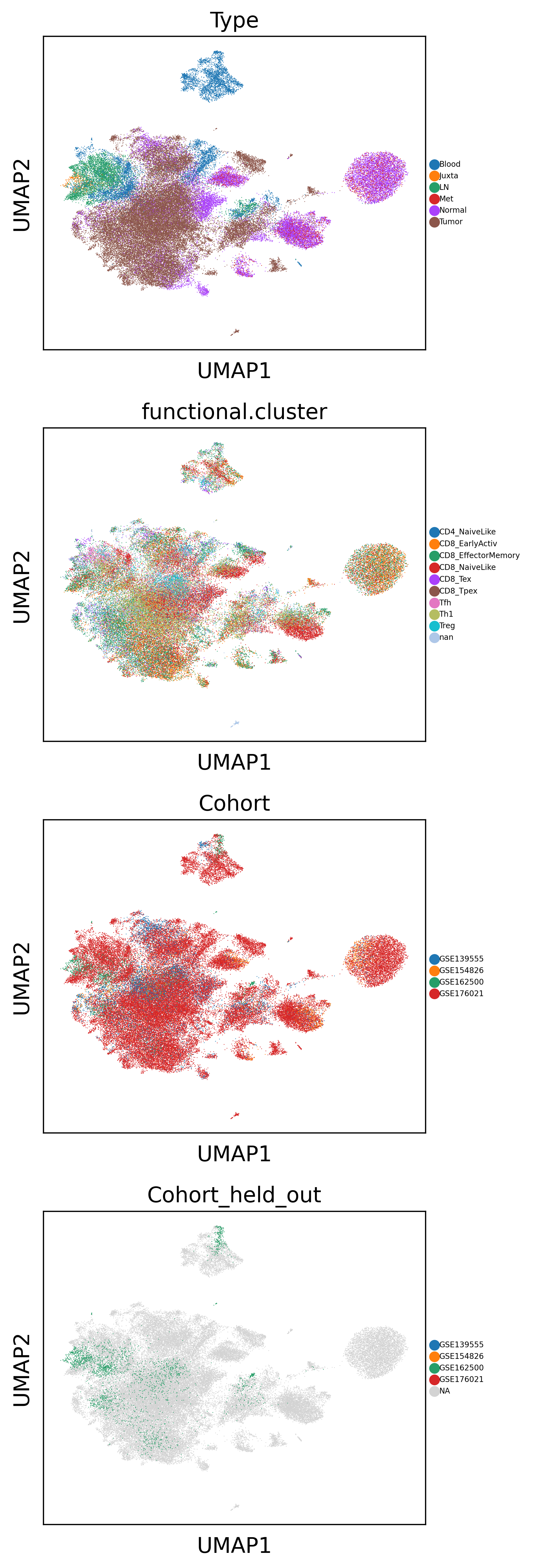

Get latent representation and visualize using UMAP

After finetuning, we take a first qualitative look on the latent representation by using UMAP to visualize them

[16]:

model = sca.models.mvTCR.utils_training.load_model(adata_hold_out, f'saved_models/finetuning/best_model_by_metric.pt', base_path='.')

[17]:

latent_adata = model.get_latent(adata, metadata=metadata, return_mean=True)

[18]:

# For visualization purpose

latent_adata.obs['Cohort_held_out'] = latent_adata.obs['Cohort'].copy()

latent_adata.obs.loc[~latent_adata.obs['Cohort'].isin(holdout_cohorts), 'Cohort_held_out'] = None

[19]:

sc.pp.neighbors(latent_adata)

sc.tl.umap(latent_adata)

[20]:

from matplotlib import rcParams

rcParams['figure.figsize'] = (4, 4)

sc.pl.umap(latent_adata, color=['Type', 'functional.cluster', 'Cohort', 'Cohort_held_out'], size=1, ncols=1, legend_fontsize=5)

Use latent representation for downstream task

We can now use the latent representation for downstream tasks, such as clustering, analysis or imputation. In this example we show how to use a simple kNN classifier to predict the cancer type of the holdout data.

[21]:

X_train = latent_adata[latent_adata.obs['set'] == 'train'].X

y_train = latent_adata[latent_adata.obs['set'] == 'train'].obs['Type']

X_test = latent_adata[latent_adata.obs['set'] == 'hold_out'].X

y_test = latent_adata[latent_adata.obs['set'] == 'hold_out'].obs['Type']

[22]:

classifier = KNeighborsClassifier(n_neighbors=5, weights='distance', n_jobs=-1)

classifier.fit(X_train, y_train)

y_pred = classifier.predict(X_test)

[23]:

print(f'F1-score for predicting Sample Origin: {f1_score(y_test, y_pred, average="weighted"):.3}')

F1-score for predicting Sample Origin: 0.744